In the final module of this course, Module 6, we will explore the topic of geometries. This includes learning how to interpret the properties of geometry objects derived from existing features, as well as the process of creating new geometry objects based on coordinate information.

Features are objects depicted on a map or stored in a dataset. A point represents a single location, like a fire hydrant or a tree; a polyline represents a linear feature, such as a road or river; and a polygon represents an area or region, like a lake, park, or administrative boundary. Each feature in a dataset is typically represented by a row in an attribute table.

A point feature in GIS represents a single location and consists of a single vertex defined by x,y coordinates. Polyline and polygon features, on the other hand, consist of multiple vertices and are constructed from two or more point objects. These vertices define the shape of the polyline or polygon feature.

To work with geometry objects, you first set up a cursor on the geometry field. The SHAPE@ token provides access to the complete geometry object; on a large dataset, however, this can slow performance. When only specific properties are required, lighter tokens can be used instead. For instance, SHAPE@XY returns a tuple of x,y coordinates representing the centroid of the feature, while SHAPE@LENGTH returns the length of the feature.
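
As a minimal sketch of the token approach, the snippet below reads only the centroid and length instead of the full geometry. The function names and the output format are my own illustration, not part of the course material, and `summarize_feature_class` assumes ArcGIS (arcpy) is installed.

```python
def summarize_rows(rows):
    """Format rows shaped like (oid, (x, y), length).

    This is the row layout a cursor would yield for the fields
    ["OID@", "SHAPE@XY", "SHAPE@LENGTH"].
    """
    return [
        f"{oid}: centroid=({x:.2f}, {y:.2f}), length={length:.2f}"
        for oid, (x, y), length in rows
    ]


def summarize_feature_class(fc):
    """Summarize every feature in fc (hypothetical path to a feature class)."""
    import arcpy  # imported here so the helper above works without ArcGIS

    # Request only the lightweight tokens rather than SHAPE@.
    fields = ["OID@", "SHAPE@XY", "SHAPE@LENGTH"]
    with arcpy.da.SearchCursor(fc, fields) as cursor:
        return summarize_rows(cursor)
```

Keeping the formatting in its own function means it can be checked with plain tuples before pointing the cursor at a real dataset.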

ArcPy provides several classes for working with geometry objects, including the generic arcpy.Geometry class, which is used to create geometry objects. In addition, ArcPy includes four specific geometry classes: Multipoint, PointGeometry, Polygon, and Polyline. Two further classes help construct geometry: arcpy.Array and arcpy.Point.

Certain features are composed of multiple parts but are represented as a single feature within the attribute table; these are referred to as multipart features. To determine whether a feature is single part or multipart, use the isMultipart property of the geometry object. The partCount property gives the total number of geometry parts in a feature. Polygons that contain holes present an additional challenge. These shapes typically have one exterior ring, whose vertices are ordered clockwise, and one or more interior rings, whose vertices are ordered counterclockwise.
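
The ring orientation rule can be illustrated without ArcGIS. In this rough sketch, a polygon part is assumed to be a list of (x, y) tuples with `None` marking the boundary between rings, which mirrors how ArcPy separates interior rings with a null point when you iterate over a polygon part. The helper names are hypothetical, and the clockwise test uses the shoelace formula rather than anything built into ArcPy.

```python
def split_rings(part):
    """Split a part into rings, using None as the ring separator."""
    rings, current = [], []
    for pt in part:
        if pt is None:
            rings.append(current)
            current = []
        else:
            current.append(pt)
    if current:
        rings.append(current)
    return rings


def signed_area(ring):
    """Shoelace formula: negative for clockwise, positive for counterclockwise."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(ring, ring[1:] + ring[:1]):
        total += x1 * y2 - x2 * y1
    return total / 2.0


def is_clockwise(ring):
    return signed_area(ring) < 0


# Made-up square with a square hole: exterior ring first, then the interior ring.
exterior = [(0, 0), (0, 10), (10, 10), (10, 0), (0, 0)]
interior = [(2, 2), (4, 2), (4, 4), (2, 4), (2, 2)]
rings = split_rings(exterior + [None] + interior)
```

Here `is_clockwise(rings[0])` is true for the exterior ring and false for the hole.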

Lastly, we explored writing geometries, that is, creating new features. New features can be created using the InsertCursor class from the arcpy.da module. This requires creating a geometry object and then saving it as a feature using the insertRow() method.

cursor = arcpy.da.InsertCursor(in_table, field_names)
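
Put together, the write workflow might look something like the sketch below: build Point objects, collect them in an Array, wrap the Array in a Polyline, and insert it. The function names, the `fc` path, and the choice of a polyline are assumptions for illustration; `insert_polyline` needs ArcGIS (arcpy) and an existing polyline feature class to run.

```python
def as_xy_pairs(coords):
    """Convert coordinate pairs to floats; a polyline needs at least two vertices."""
    pairs = [(float(x), float(y)) for x, y in coords]
    if len(pairs) < 2:
        raise ValueError("a polyline needs at least two vertices")
    return pairs


def insert_polyline(fc, coords):
    """Insert one polyline into fc (hypothetical path to a polyline feature class)."""
    import arcpy  # imported here so as_xy_pairs can be used without ArcGIS

    # Point objects go into an Array, and the Array defines the Polyline.
    points = arcpy.Array([arcpy.Point(x, y) for x, y in as_xy_pairs(coords)])
    polyline = arcpy.Polyline(points)

    # Only the geometry is written; attribute fields are left at their defaults.
    with arcpy.da.InsertCursor(fc, ["SHAPE@"]) as cursor:
        cursor.insertRow([polyline])
```

Using the cursor in a `with` block releases it automatically, so no explicit `del cursor` is needed in this version.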

The lab assignment for Module 6 requires a Python script that generates a new text file from a feature class containing polylines that represent rivers in Maui, Hawaii. For every vertex, the script writes the Object Identifier (OID) of the feature or row, the vertex ID, the X and Y coordinates of the vertex, and the name of the river feature.

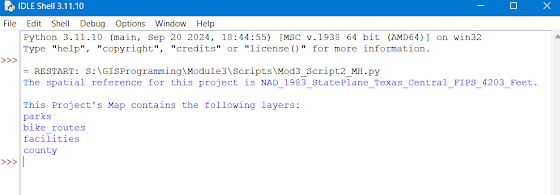

Initially, I imported all necessary modules and classes, enabled the overwriteOutput setting, configured the workspace, and defined the feature class variable. Subsequently, I created a rivers.txt file in write mode ("w") and established a search cursor for the rivers.shp file. This cursor was designed to access the OID, SHAPE, and NAME fields.

Next, I implemented a for loop to iterate through each row (feature) in the cursor, creating a variable inside it to serve as the vertex ID number. A second, nested for loop iterates through each point (vertex) in the row, using the .getPart() method to extract the x and y coordinates of the vertices and incrementing the vertex ID number with each iteration.

The output.write() method was used to add a line to the .txt file containing the feature/row OID, vertex ID, X coordinate, Y coordinate, and the name of the river feature. A print statement was also included to display each line written. Finally, outside of all loops, I closed the .txt file and deleted the row and cursor variables.
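
The steps above can be sketched roughly as follows. This is not my exact lab script: the function names and the space-separated line format are illustrative assumptions, and it iterates over the geometry's parts directly instead of calling .getPart(). `export_river_vertices` requires ArcGIS (arcpy) and assumes the feature class has a NAME field.

```python
def vertex_lines(oid, name, parts):
    """Build one text line per vertex for a single feature.

    parts is a list of parts, each a list of (x, y) tuples.
    The vertex ID restarts at 1 for every feature.
    """
    lines = []
    vertex_id = 0
    for part in parts:
        for x, y in part:
            vertex_id += 1
            lines.append(f"{oid} {vertex_id} {x} {y} {name}\n")
    return lines


def export_river_vertices(fc, out_path):
    """Write every vertex of every feature in fc to a text file."""
    import arcpy  # imported here so vertex_lines can be used without ArcGIS

    fields = ["OID@", "SHAPE@", "NAME"]
    with open(out_path, "w") as output, arcpy.da.SearchCursor(fc, fields) as cursor:
        for oid, shape, name in cursor:
            # Each part is an Array of Point objects with X and Y properties.
            parts = [[(pnt.X, pnt.Y) for pnt in part if pnt] for part in shape]
            for line in vertex_lines(oid, name, parts):
                output.write(line)
                print(line, end="")
```

For example, `vertex_lines(1, "Sample Stream", [[(1.0, 2.0), (3.5, 4.0)]])` yields two lines with vertex IDs 1 and 2; the river name here is made up.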

The execution of the script for Module 6 generated a formatted text file corresponding to each river feature within the Maui rivers feature class.
